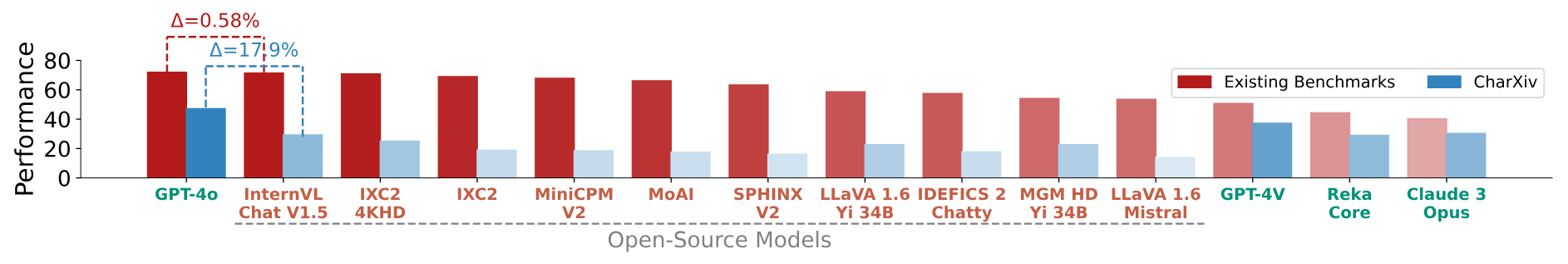

We evaluate general-purpose multimodal large language models (MLLMs) on CharXiv and provide a leaderboard for the community to track progress. All models are evaluated in a zero-shot setting, using a set of natural-language instructions for each question type. The numbers below report model performance on the validation set, which contains 1,000 charts and 5,000 questions in total.